import numpy as np
import pandas as pd

df = pd.DataFrame({'key1': list('aabba'),
                   'key2': ['one', 'two', 'one', 'two', 'one'],
                   'data1': np.random.randn(5),
                   'data2': np.random.randn(5)})

df

grouped = df['data1'].groupby(df['key1'])

grouped.mean()

The grouping keys used above are Series. However, the grouping key can be any array of appropriate length.

states = np.array(['Ohio', 'California', 'California', 'Ohio', 'Ohio'])

years = np.array([2005, 2005, 2006, 2005, 2006])

df['data1'].groupby([states, years]).mean()

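You can also pass column names as grouping keys:

df.groupby('key1').mean(numeric_only=True)

There is no key2 column in the result because df['key2'] contains non-numeric data and is excluded. Older pandas versions dropped such columns by default; since pandas 2.0 you need numeric_only=True (or an explicit column selection), otherwise mean() raises a TypeError. You can also restrict the aggregation to a subset of the columns.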
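As a minimal sketch of the two usual ways to keep non-numeric columns out of an aggregation, the example below uses a small hand-made frame. The fixed data values are assumptions chosen for illustration, not the random data used above.

```python
import numpy as np
import pandas as pd

# Illustrative data with fixed values so the result is reproducible.
df = pd.DataFrame({
    'key1': list('aabba'),
    'key2': ['one', 'two', 'one', 'two', 'one'],
    'data1': [1.0, 2.0, 3.0, 4.0, 5.0],
    'data2': [10.0, 20.0, 30.0, 40.0, 50.0],
})

# Option 1: select the numeric columns explicitly before aggregating.
means_selected = df.groupby('key1')[['data1', 'data2']].mean()

# Option 2: ask pandas to skip the non-numeric columns.
means_numeric = df.groupby('key1').mean(numeric_only=True)

print(means_selected)
print(means_numeric)
```

Both calls should produce the same table; the explicit selection has the advantage of also working when you only want some of the numeric columns.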

Iterating over the groups:

for name, group in df.groupby('key1'):
    print(name)
    print(group)

You can see that 'name' represents the value of 'key1' in the group, while 'group' contains the corresponding rows.

Similarly, with multiple keys the first element of each pair is a tuple of key values:

for (k1, k2), group in df.groupby(['key1', 'key2']):
    print('===k1,k2:')
    print(k1, k2)
    print('===group:')
    print(group)

You can perform operations on the grouped data, such as converting it into a dictionary.

piece = dict(list(df.groupby('key1')))

piece

{'a':       data1     data2 key1 key2
 0 -0.233405 -0.756316    a  one
 1 -0.232103 -0.095894    a  two
 4  1.056224  0.736629    a  one,
 'b':       data1     data2 key1 key2
 2  0.200875  0.598282    b  one
 3 -1.437782  0.107547    b  two}

piece['a']

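By default, groupby groups along axis=0 (the rows). You can also group along the columns by passing axis=1. Note that this argument is deprecated as of pandas 2.1; the usual substitute is to transpose the frame and group its rows.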

grouped = df.groupby(df.dtypes, axis=1)

dict(list(grouped))

{dtype('float64'):       data1     data2
 0 -0.233405 -0.756316
 1 -0.232103 -0.095894
 2  0.200875  0.598282
 3 -1.437782  0.107547
 4  1.056224  0.736629,
 dtype('O'):   key1 key2
 0    a  one
 1    a  two
 2    b  one
 3    b  two
 4    a  one}

Selecting one or multiple columns:

For large datasets, often only some columns need to be aggregated.

df.groupby(['key1','key2'])[['data2']].mean()

Group by dictionary or series:

people = pd.DataFrame(np.random.randn(5, 5),
                      columns=list('abcde'),
                      index=['Joe', 'Steve', 'Wes', 'Jim', 'Travis'])

people.iloc[2:3, [1, 2]] = np.nan  # set a few values to NaN (.ix was removed in pandas 1.0)

people

Suppose the grouping relationship of the columns is known:

mapping = {'a':'red', 'b': 'red', 'c': 'blue', 'd': 'blue', 'e': 'red', 'f': 'orange'}

by_column = people.groupby(mapping, axis=1)

by_column.sum()

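If you don't add axis=1, the mapping is applied to the row labels instead. None of the row labels ('Joe', 'Steve', ...) appear in the mapping, so the result is empty and only the column headers 'a', 'b', 'c', 'd', 'e' appear. Unused keys in the mapping, such as 'f', are simply ignored.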
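Because the axis argument is deprecated in recent pandas releases, it can help to see the same column grouping expressed through a transpose. This is a sketch under that assumption, with fixed values in place of the random data so the sums can be checked by hand.

```python
import numpy as np
import pandas as pd

# Fixed values 0..24 instead of random numbers, for a reproducible result.
people = pd.DataFrame(np.arange(25, dtype=float).reshape(5, 5),
                      columns=list('abcde'),
                      index=['Joe', 'Steve', 'Wes', 'Jim', 'Travis'])

mapping = {'a': 'red', 'b': 'red', 'c': 'blue',
           'd': 'blue', 'e': 'red', 'f': 'orange'}

# Transpose so the column labels become the index, group those labels
# through the mapping, aggregate, then transpose back.
by_column = people.T.groupby(mapping).sum().T

print(by_column)
```

The key 'f' has no matching column, so no 'orange' group appears in the result.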

The same applies to Series.

map_series = pd.Series(mapping)

map_series

a       red
b       red
c      blue
d      blue
e       red
f    orange
dtype: object

people.groupby(map_series,axis=1).count()

Group by function:

Compared to dictionaries or Series, Python functions are a more flexible way to define grouping relationships. Any function passed as a grouping key is called once for each index value, and its return value is used as the group name. For example, to group by the length of a person's name, you can pass in len.

people.groupby(len).sum()

          a         b         c         d         e
3 -1.308709 -2.353354  1.585584  2.908360 -1.267162
5 -0.688506 -0.187575 -0.048742  1.491272 -0.636704
6  0.110028 -0.932493  1.343791 -1.928363 -0.364745

Mixing functions with arrays, lists, dictionaries, and Series is not an issue since everything eventually gets converted to an array.

key_list = ['one','one','one','two','two']

people.groupby([len,key_list]).sum()

Group by index level:

The most convenient aspect of a hierarchical index is that it allows aggregation based on the index level. To do this, you can specify the level number or name via the 'level' keyword:

columns = pd.MultiIndex.from_arrays([['US','US','US','JP','JP'], [1,3,5,1,3]], names=['cty', 'tenor'])

hier_df = pd.DataFrame(np.random.randn(4,5), columns=columns)

hier_df

hier_df.groupby(level='cty', axis=1).count()
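The level can be given either by name or by number, as described above. The sketch below checks that both give the same counts. It groups the transposed frame rather than passing axis=1, on the assumption that you want to avoid the deprecated argument, and it fills the frame with ones instead of random numbers.

```python
import numpy as np
import pandas as pd

columns = pd.MultiIndex.from_arrays(
    [['US', 'US', 'US', 'JP', 'JP'], [1, 3, 5, 1, 3]],
    names=['cty', 'tenor'])

# Constant values are enough here because only the counts matter.
hier_df = pd.DataFrame(np.ones((4, 5)), columns=columns)

# After transposing, the column MultiIndex becomes the row index,
# so the level can be grouped without the axis argument.
by_name = hier_df.T.groupby(level='cty').count().T
by_number = hier_df.T.groupby(level=0).count().T

print(by_name)
```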

Data aggregation:

Calling a custom aggregate function:

Column-oriented multi-function application:

Aggregate operations on Series or DataFrame columns typically use aggregate or call mean, std, etc. Next, we want to apply different aggregate functions to different columns, or apply multiple functions at once.

grouped = tips.groupby(['sex', 'smoker'])  # tips: the tipping dataset with the tip_pct column, loaded further below

grouped_pct = grouped['tip_pct']

grouped_pct.agg('mean')  # For the descriptive statistics listed in Table 9-1, you can pass the function name as a string.

If you pass a list of functions instead, the columns of the resulting DataFrame are named after the corresponding functions.

The automatically assigned column names are not very descriptive (lambda functions, for example, are all named <lambda>). If a list of (name, function) tuples is passed in, the first element of each tuple is used as the column name of the resulting DataFrame.

For a DataFrame, you can apply the same list of functions to all columns, or apply different functions to different columns.

To apply different functions to different columns, pass agg a dictionary that maps column names to functions.

The resulting DataFrame has hierarchical columns only when multiple functions are applied to at least one column.
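The variants of agg described above can be sketched as follows. The small tips-like frame is made up for illustration (it is not the real tips.csv), and the names by_list, by_tuples, by_dict and by_dict_multi are only chosen for this example.

```python
import pandas as pd

# A tiny stand-in for the tips dataset; the values are invented.
tips = pd.DataFrame({
    'sex': ['F', 'M', 'M', 'F', 'M', 'F'],
    'smoker': ['No', 'No', 'Yes', 'Yes', 'No', 'No'],
    'total_bill': [10.0, 20.0, 30.0, 40.0, 50.0, 20.0],
    'tip': [1.0, 4.0, 3.0, 8.0, 5.0, 3.0],
})
tips['tip_pct'] = tips['tip'] / tips['total_bill']

grouped = tips.groupby(['sex', 'smoker'])

# A list of function names: the result columns are named after the functions.
by_list = grouped['tip_pct'].agg(['mean', 'std'])

# (name, function) tuples: the first element becomes the column name.
by_tuples = grouped['tip_pct'].agg([('average', 'mean'), ('spread', 'std')])

# A dict maps column names to functions; the columns stay flat.
by_dict = grouped.agg({'tip': 'max', 'total_bill': 'sum'})

# Several functions for at least one column: the columns become hierarchical.
by_dict_multi = grouped.agg({'tip_pct': ['min', 'max'], 'total_bill': 'sum'})

print(by_list.columns.tolist())
print(by_tuples.columns.tolist())
print(by_dict_multi.columns.tolist())
```

Groups with a single row get NaN for std, which is expected for a sample standard deviation.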

Group-level operations and transformations:

Aggregation is just one type of grouping operation, which is a special form of data transformation. Transform and apply are more versatile.

Transform will apply a function to each group and then place the results in the appropriate location. If each group produces a scalar value, the scalar value will be broadcast.

Transform is also a special function with strict conditions: the passed function can only produce two kinds of results, either a scalar value that can be broadcast (e.g., np.mean), or an array of the same size as the group.

people = pd.DataFrame(np.random.randn(5, 5),
                      columns=list('abcde'),
                      index=['Joe', 'Steve', 'Wes', 'Jim', 'Travis'])

people

key = ['one', 'two', 'one', 'two', 'one']

people.groupby(key).mean()

people.groupby(key).transform(np.mean)

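You can see that the transform result contains many repeated values: every row holds the mean of its own group, so the values are the same as in the second table (the grouped means), broadcast back to the original rows.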

def demean(arr):
    return arr - arr.mean()

demeaned = people.groupby(key).transform(demean)

demeaned

demeaned.groupby(key).mean()
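The two kinds of results that transform accepts (a broadcast scalar and an array of the group's size) can be compared in a small reproducible sketch. Fixed values replace the random data here, and the variable names are only illustrative.

```python
import numpy as np
import pandas as pd

people = pd.DataFrame(np.arange(25, dtype=float).reshape(5, 5),
                      columns=list('abcde'),
                      index=['Joe', 'Steve', 'Wes', 'Jim', 'Travis'])
key = ['one', 'two', 'one', 'two', 'one']

# Aggregation: one row per group.
group_means = people.groupby(key).mean()

# Transform with a scalar-producing function: the group mean is
# broadcast back to every original row.
broadcast = people.groupby(key).transform('mean')

# Transform with a same-size result: subtract each group's mean.
demeaned = people.groupby(key).transform(lambda arr: arr - arr.mean())

# After demeaning, every group mean should be (numerically) zero.
residual_means = demeaned.groupby(key).mean()

print(group_means.shape, broadcast.shape)
print(np.allclose(residual_means.values, 0.0))
```

Note that the transform result keeps the original index and shape, which is what makes it convenient for adding group-level statistics as new columns.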

The most general groupby method is apply.

tips = pd.read_csv('C:\\Users\\ecaoyng\\Desktop\\work space\\Python\\py_for_analysis_code\\pydata-book-master\\ch08\\tips.csv')

tips[:5]

Add a new column:

tips['tip_pct'] = tips['tip'] / tips['total_bill']

tips[:6]

Select the top 5 tip_pct values within each group:

def top(df, n=5, column='tip_pct'):
    # sort_index(by=...) was removed from pandas; sort_values is the replacement
    return df.sort_values(by=column)[-n:]

top(tips, n=6)

Group by smoker and apply the function:

tips.groupby('smoker').apply(top)

Multi-parameter version:

tips.groupby(['smoker', 'day']).apply(top, n=1, column='total_bill')
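To try the apply pattern without the CSV file, here is a self-contained sketch on a small invented frame. The data values are assumptions for illustration. The value columns are selected explicitly before apply, which is a choice made for this example so that the grouping column is handled the same way across pandas versions.

```python
import pandas as pd

# Invented stand-in for the tips dataset.
tips = pd.DataFrame({
    'smoker': ['No', 'No', 'No', 'Yes', 'Yes', 'Yes'],
    'total_bill': [10.0, 20.0, 40.0, 10.0, 25.0, 50.0],
    'tip': [1.0, 4.0, 4.0, 3.0, 5.0, 5.0],
})
tips['tip_pct'] = tips['tip'] / tips['total_bill']


def top(df, n=5, column='tip_pct'):
    """Return the n rows of df with the largest values in column."""
    return df.sort_values(by=column)[-n:]


# Extra keyword arguments after the function are forwarded to it.
result = tips.groupby('smoker')[['total_bill', 'tip', 'tip_pct']].apply(top, n=1)

print(result)
```

The result is indexed by the group key plus the original row label, so you can see which row won in each group.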

Quantile and bucket analysis:

Combining cut and qcut with groupby makes it easy to run bucket or quantile analysis on a dataset.

frame = pd.DataFrame({'data1': np.random.randn(1000),
                      'data2': np.random.randn(1000)})

frame[:5]

factor = pd.cut(frame.data1, 4)

factor[:10]

0     (0.281, 2.00374]
1     (0.281, 2.00374]
2     (-3.172, -1.442]
3      (-1.442, 0.281]
4     (0.281, 2.00374]
5     (0.281, 2.00374]
6      (-1.442, 0.281]
7      (-1.442, 0.281]
8      (-1.442, 0.281]
9      (-1.442, 0.281]
Name: data1, dtype: category
Categories (4, object): [(-3.172, -1.442] < (-1.442, 0.281] < (0.281, 2.00374] < (2.00374, 3.727]]

def get_stats(group):
    return {'min': group.min(), 'max': group.max(),
            'count': group.count(), 'mean': group.mean()}

grouped = frame.data2.groupby(factor)

grouped.apply(get_stats).unstack()

The buckets above are of equal length. To get equal-sized buckets based on sample quantiles, use qcut.

Equal-length buckets: intervals of equal width

Equal-sized buckets: an equal number of data points in each bucket

grouping = pd.qcut(frame.data1, 10, labels=False)  # labels=False returns the quantile bin numbers instead of interval labels

grouped = frame.data2.groupby(grouping)

grouped.apply(get_stats).unstack()
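The difference between equal-length and equal-sized buckets is easiest to see by counting the members of each bucket. The following is a minimal sketch; the seeded random generator and the variable names are choices made for this example only.

```python
import numpy as np
import pandas as pd

# Seeded generator so the example is reproducible.
rng = np.random.default_rng(0)
data = pd.Series(rng.standard_normal(1000))

# cut: 4 intervals of equal width, so the counts per bucket differ.
equal_width = pd.cut(data, 4)

# qcut: 4 buckets split at the sample quartiles, so the counts match.
# labels=False returns the bucket numbers 0..3 instead of intervals.
equal_count = pd.qcut(data, 4, labels=False)

width_counts = equal_width.value_counts(sort=False)
count_counts = equal_count.value_counts(sort=False)

print(width_counts)
print(count_counts)
```

For normally distributed data the outer cut buckets hold far fewer points than the inner ones, while every qcut bucket holds a quarter of the sample.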

Explosion Proof Quick Plug Connector

BX3 series of explosion-proof socket connector is applicable to the petroleum, machinery, chemical, mining, drilling, wharf, construction, can provide the power for the electric motor, electric welding machine, pump, compressor, outdoor lighting equipment.

Product design,compact structure, easy installation, high temperature, shock, anti-aging, has good insulating properties and mechanical properties, products built with trapezoidal hole rubber seal and clamp device can be used for different cable diameters, not afraid of the rain, in oil exploration, drilling, well field standardized electrical lines have been widely applied. Products meet the national standard GB3836-201 "electrical apparatus fo explosive gas atmosphere", after the inspection of the national explosion-proof electrical quality inspection, certificate of proof, explosion-proof marks CNEx19.1354X, EXnA II CT4Gc.

The product consists of two parts, plugs and sockets, which plug is removable (YT), there are three forms of socket options: 1,fixed (GZ), 2, straight-through (YZ), 3, hanging (GYZ). Connection plug and socket bayonet quick connect, contact with the wire ends to tighten the screws. Shell is made of PA66 insulation corrosion material, silver-plated contacts, access to reliable, easy wiring, sealing and insulation, waterproof, dustproof, shockproof and so on.

Main Technical Data

Operating temperature: -20 ℃ 60 ℃

Rated voltage: 0 - 500V Frequency: 50Hz - 60Hz

Rated current: 1OA/16A/25A/32A/40A/60A/63A/1OOA/125A/150A/200A/250A/300A/400A/500A/600A/630A

Withstand voltage: 1800VAC

Insulation resistance: > 100MO

Contact resistance :lower than 0.5 MO

Temperature rise: lower than 65K

Mechanical life: 500 times

Contact Poles: 3P/3P+E/3P+N+E

Housing protection: IP67

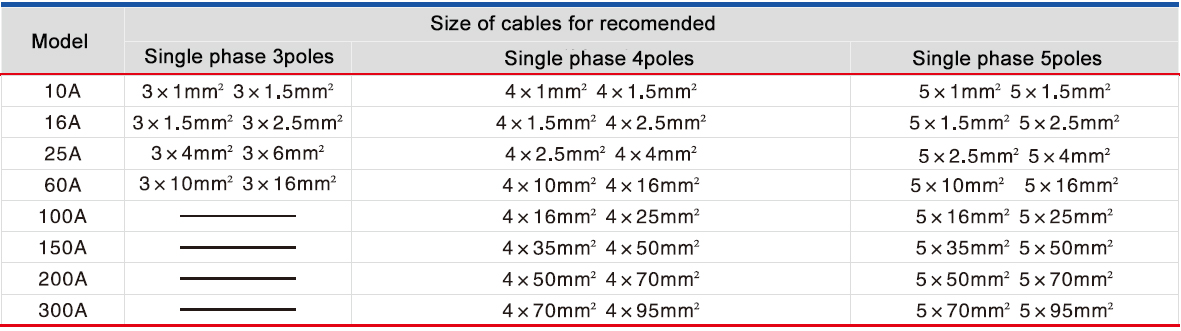

Recommend to use cables with suitabe specifications:

Product Selection Parameters:

Explosion Proof Plug Socket Connectors

Ningbo Bond Industrial Electric Co., Ltd. , https://www.bondelectro.com