Summary: When training a neural network, you usually need to define a loss function to tell the network how far it is from the target.

Three years ago, Ian Goodfellow and colleagues at the University of Montreal proposed generative adversarial networks (GANs), which gradually drew the attention of AI practitioners. Since 2016, academic and industry interest in GANs has surged. Google recently open-sourced TFGAN, a lightweight library that is reportedly intended to make training and evaluating GANs easier.

When training a neural network, you usually need to define a loss function that tells the network how far it is from its target. For example, an image classification network is usually given a loss function that penalizes it whenever it produces a wrong classification. If the network mistakes a photo of a dog for a cat, it incurs a high loss.
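The dog-versus-cat case can be made concrete with a small sketch. It assumes a softmax cross-entropy loss, which is a common choice for classifiers but is not named in the text; the class order and the raw scores are hypothetical values chosen for illustration.

```python
import math


def softmax(logits):
    """Convert raw scores into probabilities that sum to 1."""
    peak = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def cross_entropy(logits, true_index):
    """Negative log-probability the network assigns to the correct class."""
    return -math.log(softmax(logits)[true_index])


CLASSES = ["cat", "dog"]  # hypothetical label order
DOG = CLASSES.index("dog")

# The photo shows a dog. Scores are listed in CLASSES order.
loss_when_wrong = cross_entropy([4.0, 0.0], DOG)  # network favors "cat"
loss_when_right = cross_entropy([0.0, 4.0], DOG)  # network favors "dog"

print(f"predicts cat: loss = {loss_when_wrong:.3f}")
print(f"predicts dog: loss = {loss_when_right:.3f}")
```

The confident wrong answer yields a much larger loss than the confident right one, which is the signal the training procedure uses to adjust the network.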

However, for some problems a suitable loss function is hard to define, especially when they involve human perception, as in image compression or text-to-speech systems.

Generative adversarial networks (GANs) are a machine learning technique that has led to advances in a wide range of applications, including text-to-image generation, super-resolution, and robotic grasping. However, GANs introduce new challenges in both theory and software engineering, and it is difficult to keep up with the rapid pace of research.
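For readers new to the idea, the sketch below shows the two competing losses in their standard textbook form (the non-saturating variant). It is an illustration only and is not taken from TFGAN; the function names are hypothetical, and `d_real` and `d_fake` are assumed to be the discriminator's probability outputs, strictly between 0 and 1, for a real sample and a generated sample.

```python
import math


def discriminator_loss(d_real, d_fake):
    """Low when the discriminator rates real data near 1 and fakes near 0."""
    return -(math.log(d_real) + math.log(1.0 - d_fake))


def generator_loss(d_fake):
    """Low when the discriminator is fooled into rating a fake near 1."""
    return -math.log(d_fake)


# A discriminator that separates real from fake well has a low loss...
sharp_d = discriminator_loss(d_real=0.9, d_fake=0.1)
# ...while one that cannot tell them apart has a higher loss.
confused_d = discriminator_loss(d_real=0.5, d_fake=0.5)

# The generator's loss falls as its samples become more convincing.
caught_g = generator_loss(d_fake=0.1)
fooling_g = generator_loss(d_fake=0.9)

print(sharp_d < confused_d, fooling_g < caught_g)
```

Because each network's loss depends on the other's behavior, the discriminator effectively becomes a learned loss function for the generator, which is what makes the approach attractive when a hand-written loss is hard to specify.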

To make GAN-based experiments easier, Google has open-sourced TFGAN, a lightweight library designed to make training and evaluating GANs simple.

It provides the infrastructure to train a GAN, well-tested loss functions and evaluation metrics, and easy-to-use examples that show how expressive and flexible the library is. Google has also released a tutorial that includes a high-level API for quickly training a model on your own data.

The figure above shows the effect of an adversarial loss on image compression. The top row shows image patches from the ImageNet dataset. The middle row shows the result of compressing and decompressing those images with a neural network trained with a traditional loss. The bottom row shows the result from a network trained with both a traditional loss and an adversarial loss.

Although the images from the network trained with a GAN loss are less faithful to the originals, they are sharper and contain more detail than those produced by the other method.

TFGAN supports experiments in the following ways.

It provides simple function calls that cover most GAN use cases, so you can train a model on your own data in just a few lines of code. Because it is built in a modular way, it can also cover more specialized GAN designs.

You can use only the modules you want: losses, evaluation, features, training, and so on are all independent of one another. TFGAN's lightweight design means you can use it alongside other frameworks or with native TensorFlow code.

GAN models written in TFGAN can easily benefit from future infrastructure improvements, and you can choose from a large number of already-implemented losses and features without having to write your own.

Finally, the code is well tested, so you don't have to worry about the numerical or statistical mistakes that are easy to make with GAN libraries.

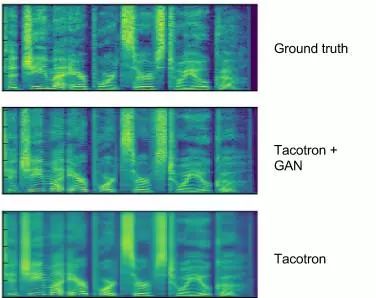

As shown above, most neural text-to-speech (TTS) systems produce spectrograms that are too smooth. When applied to the Tacotron TTS system, a GAN can recreate some of the more realistic texture, which reduces artifacts in the output audio.

With TFGAN open-sourced, you will be using the same tools that many Google researchers use, and anyone can benefit from the latest improvements Google makes to the library.
